Understanding the SwiGLU FeedForward Layers

Last updated 4 months

Exploring OG FeedForward Layers

In the original FeedForward layers of transformers, the tokens of the input are projected up to a higher dimension, usually , for the transformer blocks to learn useful computations to apply on the outputs of the attention layers. This is done through a GEMM (General Matrix Multiplication) via a Linear layer which can be represented as .

At the time when transformers were first released, the activation function used for this was the Gaussian Error Linear Unit (GeLU) activation function which also featured an inherent Self-Gating mechanism in its expression.

The reason GeLU fell out of favour compared to SiLU was because it was more difficult to optimise on GPU’s than SiLU and actually was a little bit worse with gradient flow.

Sigmoid Linear Units (SiLU)

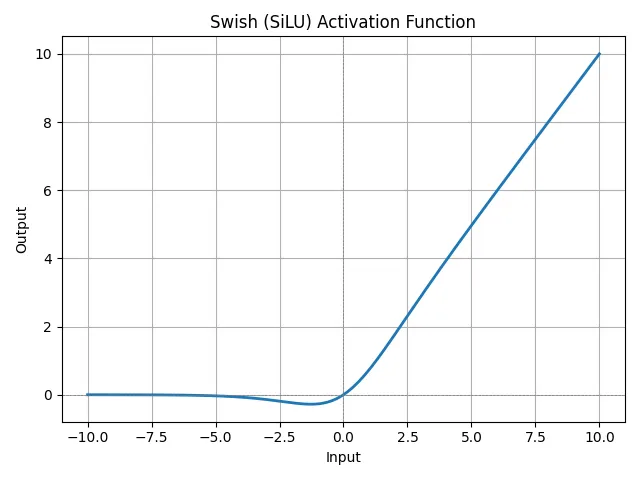

The Sigmoid Linear Unit (SiLU) activation function (also called the Swish due to its shape) is the most popular activation function in Deep Learning today and it is calculated as the input pre-activation multiplied by a sigmoid of the pre-activation.

This can also be thought of as the sigmoid activation weighted by the pre-activation.

The SiLU activation function has been popular in transformers from the beginning because of the following characteristics.

- Continuous: Is smooth all the way through (e.g. ReLU has a manually defined gradient at ). This gives much smoother gradient updates for more stable training and helps with convergence.

- Non-zero gradient all around: SiLU still outputs small negative values for negative numbers and keeps gradients flowing. ReLU’s for example would “kill” neurons with zero gradients sometimes if they had negative activations too many times.

- Self-Gating mechanism: The low pre-activation values get suppressed while high values get boosted. The SiLU creates approximately linear activation when the pre-activations are positive but increasingly low activations when the pre-activations are weak or negative. That means SiLU inherently weighs when to activate and when to dampen a neuron’s output based on its pre-activation value- making it adaptive.

The Self-Gating mechanism is the core part that makes SiLU effective. For small or negative values of , the activation function weighs it down softly and for positive or large values of , the activation function boosts it gently. This way, neurons that should have less of an impact get a lower activation weighting while important ones get a higher weighting.

And you know how we can make the gating mechanism even more adaptive and effective? Well, we give the SiLU its own learnable parameters to decide how much it should gate the output of each neuron!

This is where the idea of SwiGLU FeedForward layers (Swish + Gated Linear Unit) come in.

Towards Computational Efficiency

SwiGLU is less of an activation function and more of a re-implementation of the FeedForward layer with a learnable gating mechanism and the SiLU activation function.

SwiGLU still performs the same linear operation to project the tokens of the input up to the same dimension, but it then splits this resulting tensor into two separate tensors, and , each with the tokens in the dimensional space.

After the splitting the effectively turn the operation into and .

From there, is passed through the SiLU activation function to get a set of activations.

These activations are then multiplied element-wise by into the resulting activations as a form of gating mechanism.

Effectively, this means that the weights are new learnable gating weights that are applied to each activation, in addition to the gating mechanism that is already inherent in the SiLU function.

This SwiGLU FeedForward layer is not only more computationally efficient from simpler tensor parallelism (read the post bro I promise its worth it), but it actually also gives better performance than the regular FeedForward layer with GeLU as well.

Implementation of SwiGLU

Here's a basic implementation of SwiGLU in Python.

import torch

import torch.nn as nn

class FeedForward(nn.Module):

"""

Implements the SwiGLU feedforward layer with a SiLU non-linearity.

"""

def __init__(self, n_embd) -> None:

super().__init__()

self.n_embd = n_embd

# Projects up to higher dim the same as in regular feedforwards

self.proj_up = nn.Linear(n_embd, 4 * n_embd)

# SiLU activation function

self.silu = nn.SiLU()

# Projects back down to the original dimension

self.proj_down = nn.Linear(4 * n_embd, n_embd)

def forward(self, x: torch.Tensor):

n_embd = self.n_embd

# Splits the projected tensor into two halves

x_1, x_2 = self.proj_up(x).split(2 * n_embd, dim=2)

# Applies the SiLU activation function to the first half

# and element-wise multiplies it with the second half

x = self.silu(x_1) * x_2

# Projects back down to the original dimension for next layer

return self.proj_down(x)